Confusion Matrix

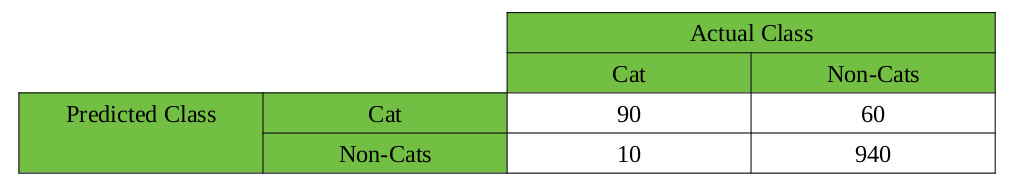

Let’s say we have a binary classifier that separates cats from non-cats, and a test set of 1100 images: 1000 non-cats and 100 cats. The output of the classifier is either Positive, which means “cat”, or Negative, which means “non-cat”. The table that summarizes the outcomes is called a confusion matrix.

Each term is read as two parts: the first word (True or False) gives the correctness of the labeling, and the second word (Positive or Negative) gives the predicted class.

True Positive:

Observation is positive, and is predicted to be positive. 90 cats are correctly labeled as cats.

True Negative:

Observation is negative, and is predicted to be negative. 940 images labeled as non-cats, and they are non-cats.

False Positive:

Observation is negative, but is predicted positive. 60 images are labeled as cats, but they are actually non-cats.

False Negative:

Observation is positive, but is predicted negative. 10 images are labeled as non-cats, but they are actually cats.

Accuracy:

Accuracy is defined as the number of correct predictions divided by the total number of predictions. Classification accuracy= (90+940)/(1000+100)= 1030/1100= 93.6%
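The same arithmetic as a short sketch. The function name `accuracy` is illustrative. The second call is a hypothetical classifier, not part of the example above, that labels every image as non-cat; it is included only to show how accuracy behaves when one class dominates the test set.

```python
def accuracy(tp, fn, fp, tn):
    """Correct predictions divided by all predictions."""
    return (tp + tn) / (tp + fn + fp + tn)


# Counts from the cat / non-cat example.
acc = accuracy(tp=90, fn=10, fp=60, tn=940)

# Hypothetical baseline: always predict non-cat, so every cat is missed.
baseline = accuracy(tp=0, fn=100, fp=0, tn=1000)

print(f"{acc:.1%}")       # 93.6%
print(f"{baseline:.1%}")  # 90.9%
```

The baseline never finds a single cat, yet its accuracy is only a few points lower.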

Precision:

Accuracy is not always a good indicator of the performance of our classifier. If one class is much more frequent in our set and the classifier predicts it correctly while wrongly labeling the smaller class, accuracy can be very high even though the performance of the classifier is bad. Precision looks at each class separately: it is the fraction of the examples predicted as a class that actually belong to that class:

Precision= True Positive/ (True Positive+ False Positive)

Precision cat= 90/(90+60) = 60%

Precision non-cat= 940/950= 98.9%
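A small sketch of the two precision values, assuming each class is treated as the "positive" class in turn. The function name `precision` is illustrative.

```python
def precision(true_pos, false_pos):
    """Fraction of examples predicted as a class that really belong to it."""
    return true_pos / (true_pos + false_pos)


# Cat as the positive class: 90 correct, 60 non-cats wrongly labeled as cats.
precision_cat = precision(true_pos=90, false_pos=60)

# Non-cat as the positive class: 940 correct, 10 cats wrongly labeled as non-cats.
precision_non_cat = precision(true_pos=940, false_pos=10)

print(f"{precision_cat:.1%}")      # 60.0%
print(f"{precision_non_cat:.1%}")  # 98.9%
```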

Recall

Recall is the number of correctly classified positive examples divided by the total number of positive examples. A classifier that favors recall is a kind of optimistic classifier.

Recall= True Positive/ (True Positive+ False Negative)

Recall cat= 90/100= 90%

Recall non-cat= 940/1000= 94%
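The recall values can be sketched the same way, again treating each class as the "positive" class in turn. The function name `recall` is illustrative.

```python
def recall(true_pos, false_neg):
    """Fraction of the examples of a class that the classifier actually finds."""
    return true_pos / (true_pos + false_neg)


# Cat as the positive class: 90 cats found, 10 cats missed.
recall_cat = recall(true_pos=90, false_neg=10)

# Non-cat as the positive class: 940 non-cats found, 60 labeled as cats.
recall_non_cat = recall(true_pos=940, false_neg=60)

print(f"{recall_cat:.1%}")      # 90.0%
print(f"{recall_non_cat:.1%}")  # 94.0%
```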

High recall, low precision: This means that our classifier finds almost all positive examples in our test set but also recognizes a lot of negative examples as positive examples.

Low recall, high precision: This means our classifier is very certain about positive examples (if it labels an example as positive, it is positive with high confidence), but it misses a lot of positive examples. This is a kind of conservative classifier.

F1-Score

Depending on the application, you might be interested in a conservative or an optimistic classifier. But sometimes you are not biased toward either behavior, so you need to combine precision and recall into a single number. F1-score is the harmonic mean of precision and recall:

F1-score= 2*Precision*Recall/(Precision+Recall)

F1-score cat= 2*0.6*0.9/(0.6+0.9)= 72%
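A minimal sketch of the F1 computation for the cat class. It also checks the result against the equivalent count-based form 2·TP / (2·TP + FP + FN), which follows algebraically from the definitions of precision and recall. Both function names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (undefined when both are 0)."""
    return 2 * precision * recall / (precision + recall)


def f1_from_counts(tp, fp, fn):
    """Same quantity written directly in terms of confusion-matrix counts."""
    return 2 * tp / (2 * tp + fp + fn)


f1_cat = f1_score(precision=0.6, recall=0.9)
f1_cat_counts = f1_from_counts(tp=90, fp=60, fn=10)

print(f"{f1_cat:.0%}")         # 72%
print(f"{f1_cat_counts:.0%}")  # 72%
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a classifier cannot reach a high F1-score by excelling at only one of precision or recall.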

Sensitivity and Specificity

Sensitivity and specificity are two other popular metrics, mostly used in medicine and biology. They amount to computing recall for the positive class and for the negative class, respectively.

Sensitivity= Recall= TP/(TP+FN)

Specificity= True Negative Rate= TN/(TN+FP)

Receiver Operating Characteristic (ROC) Curve

The output of a classifier is usually a probability score, and we accept or reject an example by comparing that score with a cut-off value. The ROC curve plots the True Positive Rate (TPR) against the False Positive Rate (FPR) for various threshold values. It is a popular curve for looking at overall model performance and for picking a good cut-off threshold for the model.

False Positive Rate

A high value (above 0.5) means False Positive > True Negative: among the examples that are actually Negative, the classifier labels more of them as Positive than it correctly labels as Negative.

A small value (below 0.5) means True Negative > False Positive: among the examples that are actually Negative, the classifier correctly labels more of them as Negative than it wrongly labels as Positive.

False Positive Rate=1-Specificity=False Positive/ (False Positive + True Negative)

True Positive Rate

True Positive Rate = Sensitivity= Recall= True Positive /(True Positive +False Negative)
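To make the threshold sweep concrete, here is a minimal sketch that computes one (FPR, TPR) point per cut-off value. The labels, scores, and thresholds are hypothetical toy values chosen for illustration, not results from the cat example, and the helper name `roc_points` is an assumption. The sketch assumes both classes are present, otherwise the rates would divide by zero.

```python
def roc_points(labels, scores, thresholds):
    """Return a list of (threshold, fpr, tpr), one entry per threshold.

    An example is predicted Positive when its score is >= the threshold.
    Assumes labels contain at least one positive (1) and one negative (0).
    """
    points = []
    for t in thresholds:
        preds = [1 if s >= t else 0 for s in scores]
        tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
        fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
        fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
        tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
        tpr = tp / (tp + fn)  # True Positive Rate (recall)
        fpr = fp / (fp + tn)  # False Positive Rate (1 - specificity)
        points.append((t, fpr, tpr))
    return points


# Hypothetical toy data: 4 positives and 4 negatives with made-up scores.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]
thresholds = [0.0, 0.25, 0.5, 0.75, 1.01]

points = roc_points(labels, scores, thresholds)
for t, fpr, tpr in points:
    print(f"threshold={t:.2f}  FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold moves the point toward (1, 1), where everything is labeled Positive; raising it moves the point toward (0, 0), where nothing is. Plotting the FPR values on the x-axis against the TPR values on the y-axis gives the ROC curve.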

Area Under the Curve (AUC)

Sørensen–Dice Coefficient

Confidence Interval