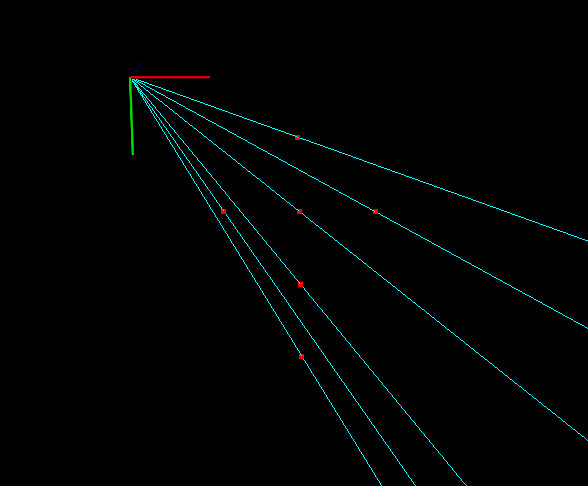

Projection of Points from 3D in the camera plane:

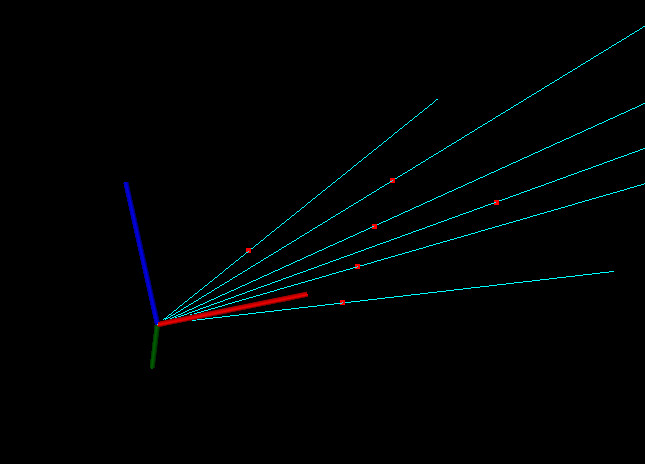

Computed rays from the camera origin in the direction of points:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 |

void virtualCameraSimulator(int argc, char ** argv) { int numberOfPixelInHeight,numberOfPixelInWidth; double heightOfSensor, widthOfSensor; double focalLength=0.5; double mx, my, U0, V0; numberOfPixelInHeight=600; numberOfPixelInWidth=600; heightOfSensor=10; widthOfSensor=10; my=(numberOfPixelInHeight)/heightOfSensor ; U0=(numberOfPixelInHeight)/2 ; mx=(numberOfPixelInWidth)/widthOfSensor; V0=(numberOfPixelInWidth)/2; cv::Mat cameraMatrix = (cv::Mat_<double>(3,3) << focalLength*mx, 0, V0, 0,focalLength*my,U0, 0,0,1); cv::Mat distortionCoefficient= (cv::Mat_<double>(5,1) <<0, 0, 0, 0, 0); ////////////////////////////////////////////setting up virtual camera extrinsic /////////////////////////////////////////////////////// cv::Mat cameraRotation=cv::Mat::eye(3,3, CV_64F); double roll,pitch,yaw; cv::Vec3d theta; roll=0 ; pitch=0; yaw=0; theta[0]=roll; theta[1]=pitch; theta[2]=yaw; cameraRotation =eulerAnglesToRotationMatrix(theta); double tx,ty,tz; tx=0; ty=0; tz=0; cv::Mat cameraTranslation = (cv::Mat_<double>(3,1) <<tx,ty,tz); //////////////////////////////////////////projecting 3D points from world into virtual camera ////////////////////////////// std::vector<cv::Point3d> objectpoints; std::vector<cv::Point2d> projectedPoints; /* Points are in the following form (OpenCV coordinate): Z ▲ / / / 1 2 X |------------ ⯈ 1| . 2| . . . 3| . 4| . | Y ⯆ */ std::string pathToPointFile(argv[1]); io::CSVReader<3> in(pathToPointFile); in.read_header(io::ignore_extra_column, "x", "y", "z"); double x,y,z; pcl::PointXYZ point; boost::shared_ptr<pcl::PointCloud<pcl::PointXYZ> > pointsInCameraCloud(new pcl::PointCloud<pcl::PointXYZ>); while(in.read_row(x,y,z)) { objectpoints.push_back(cv::Point3d(x,y,z)); pointsInCameraCloud->push_back(pcl::PointXYZ(x,y,z)); } cv::projectPoints(objectpoints, cameraRotation, cameraTranslation, cameraMatrix, distortionCoefficient, projectedPoints); ///////////////////////////////////////////////saving the image ////////////////////////////////////////// double U,V; cv::Mat cameraImage=cv::Mat::zeros(numberOfPixelInHeight,numberOfPixelInWidth,CV_8UC1); cv::line(cameraImage,cv::Point2d(numberOfPixelInWidth/2,0),cv::Point2d(numberOfPixelInWidth/2,numberOfPixelInHeight),cv::Scalar(255,255,255)); cv::line(cameraImage,cv::Point2d(0,numberOfPixelInHeight/2),cv::Point2d(numberOfPixelInWidth,numberOfPixelInHeight/2),cv::Scalar(255,255,255)); for(std::size_t i=0;i<projectedPoints.size();i++) { V=int(projectedPoints.at(i).x); U=int(projectedPoints.at(i).y); //std::cout<<U <<"," <<V <<std::endl; cameraImage.at<char>(int(U),int(V))=char(255); } std::string fileName=std::string("image_")+std::to_string(focalLength)+ std::string("_.jpg"); cv::imwrite(fileName,cameraImage); /////////////////////////////////////////// ray tracing//////////////////////////////////////////// std::vector<cv::Point3d> directionRays; reverseProjection(distortionCoefficient , cameraMatrix, projectedPoints, directionRays); /////////////////////////////////////////// 3D visualization//////////////////////////////////////////// boost::shared_ptr<pcl::visualization::PCLVisualizer> viewer (new pcl::visualization::PCLVisualizer ("3D Viewer")); viewer->setBackgroundColor (0, 0, 0); int pointSize=5; double coordinateFrameScaleSize=1; std::string coordinateName; coordinateName="World"; int viewport = 0; viewer->addCoordinateSystem(coordinateFrameScaleSize,Eigen::Affine3f::Identity(),coordinateName,viewport); double rayScale=5; double r,g,b; r=0.0; g=1.0; b=1.0; for(std::size_t i=0;i<directionRays.size();i++) { std::cout<< directionRays[i].x<<","<<directionRays[i].y<<","<<directionRays[i].z <<std::endl; viewer->addLine(pcl::PointXYZ(0 ,0 , 0), pcl::PointXYZ(rayScale*directionRays[i].x,rayScale*directionRays[i].y,rayScale*directionRays[i].z), r, g, b, std::to_string(i+100),viewport); } int Red,Blue,Green; Red=255; Green=0; Blue=0; pcl::visualization::PointCloudColorHandlerCustom<pcl::PointXYZ > tgt_h ( pointsInCameraCloud,Red,Green,Blue ); viewer->addPointCloud<pcl::PointXYZ> (pointsInCameraCloud ,tgt_h , std::to_string(1), 0); viewer->setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE, pointSize, std::to_string(1)); while (!viewer->wasStopped ()) { viewer->spinOnce (100); boost::this_thread::sleep (boost::posix_time::microseconds (100000)); } } |

points are stored in CSV file like this:

|

1 2 3 4 5 6 7 |

x,y,z 2,1, 1 1,2, 1 2,2, 1 3,2, 1 2,3, 1 2,4, 1 |

The content of “reverse_projection.hpp”

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 |

/////////////////////////////////////////implementing OpenCV undistortPoints/////////////////////////////////////// cv::Mat imagePointMatrix=cv::Mat_<double>(3,1); cv::Mat imageRayMatrix=cv::Mat_<double>(3,1); std::cout<<"===============Inverse of Camera Matrix(OpenCV)==========================="<<std::endl; std::cout<<cameraMatrix.inv()<<std::endl; std::cout<<"=====================Inverse of Camera Matrix====================="<<std::endl; cv::Mat inv=(cv::Mat_<double>(3,3)<< cameraMatrix.at<double>(1,1) ,0,-cameraMatrix.at<double>(1,1)*cameraMatrix.at<double>(0,2) ,0,cameraMatrix.at<double>(0,0),-cameraMatrix.at<double>(1,2)*cameraMatrix.at<double>(0,0) ,0,0,cameraMatrix.at<double>(0,0)*cameraMatrix.at<double>(1,1)); std::cout<<inv/(cameraMatrix.at<double>(1,1)*cameraMatrix.at<double>(0,0))<<std::endl; std::cout<<"=====================Computed Rays===================== "<<std::endl; for(std::size_t i=0;i<imagePointsInCamera.size();i++) { imagePointMatrix.at<double>(0,0)=imagePointsInCamera[i].x; imagePointMatrix.at<double>(1,0)=imagePointsInCamera[i].y; imagePointMatrix.at<double>(2,0)=1.0; imageRayMatrix=cameraMatrix.inv()*imagePointMatrix; std::cout<<imageRayMatrix <<std::endl; } /* (u,v) is the input point, (u', v') is the output point camera_matrix=[fx 0 cx; 0 fy cy; 0 0 1] P=[fx' 0 cx' tx; 0 fy' cy' ty; 0 0 1 tz] x" = (u - cx)/fx y" = (v - cy)/fy (x',y') = undistort(x",y",dist_coeffs) [X,Y,W]T = R*[x' y' 1]T x = X/W, y = Y/W only performed if P=[fx' 0 cx' [tx]; 0 fy' cy' [ty]; 0 0 1 [tz]] is specified u' = x*fx' + cx' v' = y*fy' + cy', */ std::vector<cv::Point2d> imagePointsInCameraNormalizedCoordinate; cv::undistortPoints(imagePointsInCamera,imagePointsInCameraNormalizedCoordinate,cameraMatrix,distortionCoefficient); std::vector<cv::Point3d> imagePointsInCameraNormalizedCoordinateHomogeneous; cv::convertPointsToHomogeneous(imagePointsInCameraNormalizedCoordinate,imagePointsInCameraNormalizedCoordinateHomogeneous); cv::Mat imagePointsInCameraNormalizedCoordinateHomogeneousMatrix=cv::Mat(imagePointsInCameraNormalizedCoordinateHomogeneous); imagePointsInCameraNormalizedCoordinateHomogeneousMatrix=imagePointsInCameraNormalizedCoordinateHomogeneousMatrix.reshape(1).t(); double a,b,c; std::cout<<"=====================OpenCV Rays=====================" <<std::endl; for(int i=0;i<imagePointsInCameraNormalizedCoordinateHomogeneousMatrix.cols;i++) { a=imagePointsInCameraNormalizedCoordinateHomogeneousMatrix.at<double>(0,i); b=imagePointsInCameraNormalizedCoordinateHomogeneousMatrix.at<double>(1,i); c=imagePointsInCameraNormalizedCoordinateHomogeneousMatrix.at<double>(2,i); std::cout<<a <<std::endl; std::cout<<b <<std::endl; std::cout<<c <<std::endl; directionRays.push_back(cv::Point3d(a,b,c)); } /* The inverse of a 3x3 matrix: | a11 a12 a13 |-1 | a21 a22 a23 | = 1/DET * A^-1 | a31 a32 a33 | with A^-1 = | a33a22-a32a23 -(a33a12-a32a13) a23a12-a22a13 | |-(a33a21-a31a23) a33a11-a31a13 -(a23a11-a21a13)| | a32a21-a31a22 -(a32a11-a31a12) a22a11-a21a12 | and DET = a11(a33a22-a32a23) - a21(a33a12-a32a13) + a31(a23a12-a22a13) Camera Matrix: |fx 0 cx| |0 fy cy| |0 0 1 | Rays are A^-1*p: 1 |fy 0 -fycx| |u| ----- |0 fx -cy*fx| *|v| = [ (u- cx)/fx, (v-cx)/fy, 1] fx*fy |0 0 fy*fx| |1| */ |

The content of “transformation.hpp“

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 |

#include <opencv2/opencv.hpp> #include <Eigen/Eigen> cv::Mat eulerAnglesToRotationMatrix(cv::Vec3d &theta) { // Calculate rotation about x axis cv::Mat R_x = (cv::Mat_<double>(3,3) << 1, 0, 0, 0, cos(theta[0]), -sin(theta[0]), 0, sin(theta[0]), cos(theta[0]) ); // Calculate rotation about y axis cv::Mat R_y = (cv::Mat_<double>(3,3) << cos(theta[1]), 0, sin(theta[1]), 0, 1, 0, -sin(theta[1]), 0, cos(theta[1]) ); // Calculate rotation about z axis cv::Mat R_z = (cv::Mat_<double>(3,3) << cos(theta[2]), -sin(theta[2]), 0, sin(theta[2]), cos(theta[2]), 0, 0, 0, 1); // Combined rotation matrix cv::Mat R = R_z * R_y * R_x; return R; } bool isRotationMatrix(cv::Mat &R) { cv::Mat Rt; cv::transpose(R, Rt); cv::Mat shouldBeIdentity = Rt * R; cv::Mat I = cv::Mat::eye(3,3, shouldBeIdentity.type()); return cv::norm(I, shouldBeIdentity) < 1e-6; } cv::Vec3d rotationMatrixToEulerAngles(cv::Mat &R) { assert(isRotationMatrix(R)); float sy = sqrt(R.at<double>(0,0) * R.at<double>(0,0) + R.at<double>(1,0) * R.at<double>(1,0) ); bool singular = sy < 1e-6; // If float x, y, z; if (!singular) { x = atan2(R.at<double>(2,1) , R.at<double>(2,2)); y = atan2(-R.at<double>(2,0), sy); z = atan2(R.at<double>(1,0), R.at<double>(0,0)); } else { x = atan2(-R.at<double>(1,2), R.at<double>(1,1)); y = atan2(-R.at<double>(2,0), sy); z = 0; } return cv::Vec3f(x, y, z); } void transformPoints(cv::Mat rotation,cv::Mat translation, std::vector<cv::Point3d> &inputPoints,std::vector<cv::Point3d> &outputPoints) { std::vector<cv::Point3d> pointsInLeftCamera; for(std::size_t i=0;i<inputPoints.size();i++) { cv::Point3d X=cv::Point3d(inputPoints[i].x ,inputPoints[i].y, inputPoints[i].z ); cv::Mat X_mat=cv::Mat(X); cv::Mat pointInCameraMatrix=rotation*X_mat+translation; cv::Point3d pointInCameraCoordinate= cv::Point3d( pointInCameraMatrix.at<double>(0,0),pointInCameraMatrix.at<double>(1,0), pointInCameraMatrix.at<double>(2,0) ); outputPoints.push_back(pointInCameraCoordinate); } } Eigen::Matrix3d eulerAnglesToRotationMatrix(double roll, double pitch,double yaw) { Eigen::AngleAxisd rollAngle(roll, Eigen::Vector3d::UnitX()); Eigen::AngleAxisd pitchAngle(pitch, Eigen::Vector3d::UnitY()); Eigen::AngleAxisd yawAngle(yaw, Eigen::Vector3d::UnitZ()); Eigen::Quaternion<double> q = yawAngle * pitchAngle * rollAngle; Eigen::Matrix3d rotationMatrix = q.matrix(); return rotationMatrix; } |